Deep learning functions

Hi there,

The second part of the project is coming to an end. This period was quite interesting because I had to dive into the theory behind neural networks. In particular, [1], [2], [3] and [4] were very useful, and I will sum up some of their concepts below. On the other hand, the coding became more challenging: the focus was on the Python layer and, in particular, on how to structure the class so that everything is scalable and generalizable. To summarize the situation: in the first period I implemented all the Octave classes for the user interface. They are Matlab compatible and they call the Python functions in a seamless way. On the Python side, the TensorFlow API is used to build the graph of the neural network and to perform training, evaluation and prediction.

I implemented the three core functions: trainNetwork, SeriesNetwork and trainingOptions. To do this, I used a Python class: I initialize an object with the graph of the network and store this object as an attribute of SeriesNetwork. This way, I can call the methods of this class from trainNetwork to perform the training and from predict/classify to perform the predictions. Since it was quite hard to get a clear view of the whole picture, I first used a Python wrapper (Keras) that allowed me to focus on the integration, "unpack" the problem and go forward "module" by "module". Now I am removing the dependency on the Keras library by using the TensorFlow API directly. The code is in my repo [5].

Since I have already explained in previous posts how I structured the package, in this post I would like to focus on the theoretical basis of the deep learning functions used in the package. In particular, I will present the available layers and the parameters that are available for the training.

Neurons

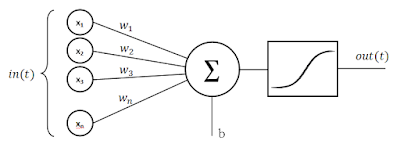

Let's start from the perceptron, that is the starting point for understanding neural networks and its components. A perceptron is simply a "node" that takes several binary inputs, $ x_1, x_2, ... $, and produces a single binary output:

The neuron's output, 0 or 1, is determined by whether the linear combination of the inputs $ \omega \cdot x = \sum_j \omega_j x_j $ is less than or greater than some threshold value. That is a simple mathematical model but is very versatile and powerful because we can combine many perceptrons and varying the weights and the threshold we can get different models. Moving the threshold to the other side of the inequality and replacing it by what's known as the perceptron's bias, b = −threshold, we can rewrite it as

Speaking about learning algorithms, the proceedings are simple: we suppose we make a small change in some weight or bias and what see the corresponding change in the output from the network. If a small change in a weight or bias causes only a small change in output, then we could use this fact to modify the weights and biases to get our network to behave more in the manner we want. The problem is that this isn't what happens when our network contains perceptrons since a small change of any single perceptron can sometimes cause the output of that perceptron to completely flip, say from 0 to 1. We can overcome this problem by introducing an activation function. Instead of the binary output we use a function depending on weights and bias. The most common is the sigmoid function:

Loss function

Let x be a training input and y(x) the desired output. What we'd like is an algorithm which lets us find weights and biases so that the output from the network approximates y(x) for all x. Most used loss function is mean squared error (MSE) :

To minimize the loss function, there are many optimizing algorithms. The one we will use is the gradient descend, of which every iteration of an epoch is defined as:

where m is the size of the batch of inputs with which we feed the network and $ \eta $ is the learning rate.

Backpropagation

The last concept that I would like to emphasize is the backpropagation. Its goal is to compute the partial derivatives$ \partial L / \partial \omega $ and $ \partial L / \partial b} $ of the loss function L with respect to any weight or bias in the network. The reason is that those partial derivatives are computationally heavy and the network training would be excessively slow.

Let be $ z^l $ the weighted input to the neurons in layer l, that can be viewed as a linear function of the activations of the previous layer: $ z^l = \omega^l a^{l-1} + b^l $ .

In the fundamental steps of backpropagation we compute:

1) the final error:

2) the error of every layer l:

3) the partial derivative of the loss function with respect to any bias in the net

4) the partial derivative of the loss function with respect to any weight in the net

We can therefore update the weights and the biases with the gradient descent and train the network. Since inputs can be too numerous, we can use only a random sample of the inputs. Stochastic Gradient Descent (SGD) simply does away with the expectation in the update and computes the gradient of the parameters using only a single or a few training examples. In particular, we will use the SGD with momentum, that is a method that helps accelerate SGD in the relevant direction and damping oscillations. It does this by adding a fraction γ of the update vector of the past time step to the current update vector.

Convolution2DLayer

The convolution layer is the core building block of a convolutional neural network (CNN) and it does most of the computational heavy lifting. They derive their name from the “convolution” operator. The primary purpose of convolution is to extract features from the input image preserving the spatial relationship between pixels by learning image features using small squares of input data.

In the example in Fig.2, the 3×3 matrix is called a 'filter' or 'kernel' and the matrix formed by sliding the filter over the image and computing the dot product is called the 'Convolved Feature' or 'Activation Map' (or the 'Feature Map'). In practice, a CNN learns the values of these filters on its own during the training process (although we still need to specify parameters such as number of filters, filter size, architecture of the network etc. before the training process). The more number of filters we have, the more image features get extracted and the better our network becomes at recognizing patterns in unseen images.

The size of the Feature Map depends on three parameters: the depth (that corresponds to the number of filters we use for the convolution operation), the stride (that is the number of pixels by which we slide our filter matrix over the input matrix) and the padding (that consists in padding the input matrix with zeros around the border).

ReLULayer

ReLU stands for Rectified Linear Unit and is a non-linear operation: $ f(x)=max(0,x) $. Usually this is applied element-wise to the output of some other function, such as a matrix-vector product. It replaces all negative pixel values in the feature map by zero with the purpose of introducing non-linearity in our network, since most of the real-world data we would want to learn would be non-linear.

FullyConnectedLayer

Neurons in a fully connected layer have full connections to all activations in the previous layer, as seen in regular Neural Networks. Hence their activations can be computed with a matrix multiplication followed by a bias offset. In our case, the purpose of the fully-connected layer is to use these features for classifying the input image into various classes based on the training dataset. Apart from classification, adding a fully-connected layer is also a cheap way of learning non-linear combinations of the features.

Pooling

It is common to periodically insert a pooling layer in-between successive convolution layers. Spatial Pooling (also called subsampling or downsampling) reduces the dimensionality of each feature map but retains the most important information. In particular, pooling

- makes the input representations (feature dimension) smaller and more manageable

- reduces the number of parameters and computations in the network, therefore, controlling overfitting

- makes the network invariant to small transformations, distortions and translations in the input image

- helps us arrive at an almost scale invariant representation of our image

Spatial Pooling can be of different types: Max, Average, Sum etc.

MaxPooling2DLayer

In case of Max Pooling, we define a spatial neighborhood (for example, a 2×2 window) and take the largest element from the rectified feature map within that window.

AveragePooling2DLayer

Instead of taking the largest element we could also take the average.

DropoutLayer

Dropout in deep learning works as follows: one or more neural network nodes is switched off once in a while so that it will not interact with the network. With dropout, the learned weights of the nodes become somewhat more insensitive to the weights of the other nodes and learn to decide somewhat more by their own. In general, dropout helps the network to generalize better and increase accuracy since the influence of a single node is decreased.

SoftmaxLayer

The purpose of the softmax classification layer is simply to transform all the net activations in your final output layer to a series of values that can be interpreted as probabilities. To do this, the softmax function is applied onto the net intputs.

CrossChannelNormalizationLayer

Local Response Normalization (LRN) layer implements the lateral inhibition that in neurobiology refers to the capacity of an excited neuron to subdue its neighbors. This layer is useful when we are dealing with ReLU neurons because they have unbounded activations and we need LRN to normalize that. We want to detect high frequency features with a large response. If we normalize around the local neighborhood of the excited neuron, it becomes even more sensitive as compared to its neighbors. At the same time, it will dampen the responses that are uniformly large in any given local neighborhood. If all the values are large, then normalizing those values will diminish all of them. So basically we want to encourage some kind of inhibition and boost the neurons with relatively larger activations.

Theoretical dive

I. Fundamentals

I want to start with a brief explanation about the perceptron and the back propagation, two key concepts in the artificial neural networks world.

Let's start from the perceptron, that is the starting point for understanding neural networks and its components. A perceptron is simply a "node" that takes several binary inputs, $ x_1, x_2, ... $, and produces a single binary output:

The neuron's output, 0 or 1, is determined by whether the linear combination of the inputs $ \omega \cdot x = \sum_j \omega_j x_j $ is less than or greater than some threshold value. That is a simple mathematical model but is very versatile and powerful because we can combine many perceptrons and varying the weights and the threshold we can get different models. Moving the threshold to the other side of the inequality and replacing it by what's known as the perceptron's bias, b = −threshold, we can rewrite it as

$ out = \begin{cases} 0 & \text{if } \omega \cdot x + b \leq 0 \\ 1 & \text{if } \omega \cdot x + b > 0 \end{cases} $

Using perceptrons as the artificial neurons of a network, it turns out that we can devise learning algorithms which automatically tune the weights and biases. This tuning happens in response to external stimuli, without direct intervention by a programmer, and this is what enables "automatic" learning.

The idea behind learning algorithms is simple: we make a small change in some weight or bias and observe the corresponding change in the output of the network. If a small change in a weight or bias causes only a small change in the output, then we can use this fact to modify the weights and biases so that the network behaves more in the manner we want. The problem is that this is not what happens when the network contains perceptrons: a small change in the weights or bias of any single perceptron can sometimes cause its output to flip completely, say from 0 to 1. We can overcome this problem by introducing an activation function: instead of the binary output, we use a smooth function of the weights and bias. The most common is the sigmoid function:

$ \sigma (\omega \cdot x + b ) = \dfrac{1}{1 + e^{-(\omega \cdot x + b ) } } $

Thanks to the smoothness of the activation function $ \sigma $, we can measure the output changes analytically, since $ \Delta out $ is, to first order, a linear function of the changes $ \Delta \omega $ and $ \Delta b $:

$ \Delta out \approx \sum_j \dfrac{\partial out}{\partial \omega_j} \Delta \omega_j + \dfrac{\partial out}{\partial b} \Delta b $
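To make the difference concrete, here is a minimal sketch in plain Python. The weights, bias and input are made-up values chosen for illustration, not something taken from the package. It compares how a perceptron and a sigmoid neuron react to the same small change in one weight.

```python
import math

def perceptron(w, x, b):
    """Binary output: 1 if w.x + b > 0, else 0."""
    z = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if z > 0 else 0

def sigmoid_neuron(w, x, b):
    """Smooth output in (0, 1): sigma(w.x + b)."""
    z = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

# Illustrative values chosen so that w.x + b sits very close to zero.
x = [1.0, 1.0]
b = -1.0
w_before = [0.5, 0.49]   # w.x + b is slightly negative
w_after = [0.5, 0.51]    # w.x + b is slightly positive

step_change = perceptron(w_after, x, b) - perceptron(w_before, x, b)
smooth_change = sigmoid_neuron(w_after, x, b) - sigmoid_neuron(w_before, x, b)

print("perceptron output change:", step_change)
print("sigmoid output change:   ", smooth_change)
```

The perceptron output flips completely, while the sigmoid output moves by roughly $ \sigma'(0) \cdot \Delta z = 0.25 \cdot 0.02 $, which is the kind of small, predictable change a learning algorithm can exploit.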

Loss function

Let x be a training input and y(x) the desired output. What we would like is an algorithm that finds weights and biases such that the output of the network approximates y(x) for all x. The most widely used loss function is the mean squared error (MSE):

$ L( \omega, b) = \dfrac{1}{2n} \sum_x || y(x) - out ||^2 $ ,

where n is the total number of training inputs and out is the vector of outputs from the network when x is the input. There are many optimization algorithms for minimizing the loss function. The one we will use is gradient descent, in which every iteration of an epoch is defined as:

$ \omega_k \rightarrow \omega_k' = \omega_k - \dfrac{\eta}{m} \sum_j \dfrac{\partial L_{X_j}}{\partial \omega_k} $

$ b_k \rightarrow b_k' = b_k - \dfrac{\eta}{m} \sum_j \dfrac{\partial L_{X_j}}{\partial b_k} $

where m is the size of the batch of inputs with which we feed the network, $ L_{X_j} $ is the loss on the j-th input $ X_j $ of the batch and $ \eta $ is the learning rate.
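As a rough illustration of these update rules, the following sketch fits a one-dimensional linear model out = w·x + b by mini-batch gradient descent on the MSE loss. The function name `train_linear`, the toy data and all hyperparameter values are my own assumptions for the example; the package itself delegates this work to TensorFlow.

```python
import random

def train_linear(data, eta=0.1, batch_size=4, epochs=200, seed=0):
    """Fit out = w*x + b by mini-batch gradient descent on the MSE loss."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            m = len(batch)
            # Per-sample loss is 0.5 * (y - out)^2, so
            # dL/dw = -(y - out) * x and dL/db = -(y - out).
            grad_w = sum(-(y - (w * x + b)) * x for x, y in batch)
            grad_b = sum(-(y - (w * x + b)) for x, y in batch)
            # Update rule: parameter -= eta / m * sum of per-sample gradients.
            w -= eta / m * grad_w
            b -= eta / m * grad_b
    return w, b

# Toy data generated from y = 2x + 1 (hypothetical, for illustration only).
samples = [(i / 10.0, 2.0 * i / 10.0 + 1.0) for i in range(-10, 11)]
w, b = train_linear(samples)
print(w, b)
```

On this noiseless toy data the parameters approach w = 2 and b = 1; on real data the result would of course depend on the learning rate, the batch size and the number of epochs.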

Backpropagation

The last concept that I would like to emphasize is backpropagation. Its goal is to compute the partial derivatives $ \partial L / \partial \omega $ and $ \partial L / \partial b $ of the loss function L with respect to any weight or bias in the network. The reason we need it is that computing those partial derivatives naively is computationally heavy, and the network training would be excessively slow.

Let $ z^l $ be the weighted input to the neurons in layer l, which can be viewed as a linear function of the activations of the previous layer: $ z^l = \omega^l a^{l-1} + b^l $, where $ a^l = \sigma(z^l) $ are the activations of layer l.

In the fundamental steps of backpropagation we compute:

1) the final error:

$ \delta ^L = \nabla_a L \odot \sigma' (z^L) $

The first term measures how fast the loss changes as a function of each output activation, and the second term measures how fast the activation function changes at $ z^L $. Here the superscript L denotes the last layer and $ \odot $ is the element-wise product.

2) the error of every layer l:

$ \delta^l = ((\omega^{l+1})^T \delta^{l+1} ) \odot \sigma' (z^l) $

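3) the partial derivative of the loss function with respect to any bias in the net:

$ \dfrac{\partial L}{\partial b^l_j} = \delta^l_j $

4) the partial derivative of the loss function with respect to any weight in the net:

$ \dfrac{\partial L}{\partial \omega^l_{jk}} = a_k^{l-1} \delta^l_j $

We can therefore update the weights and the biases with gradient descent and train the network. Since the inputs can be too numerous, we can use only a random sample of them. Stochastic Gradient Descent (SGD) simply does away with the expectation in the update and computes the gradient of the parameters using only a single or a few training examples. In particular, we will use SGD with momentum, a method that helps accelerate SGD in the relevant direction and dampens oscillations. It does this by adding a fraction γ of the update vector of the past time step to the current update vector.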
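To check the backpropagation formulas above, here is a small sketch in plain Python for a sigmoid network and the per-sample loss $ \frac{1}{2} || y - out ||^2 $. The 2-2-1 network, its parameters and the helper names are hypothetical and only serve the example; the gradient from backpropagation is compared with a finite-difference estimate.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def forward(weights, biases, x):
    """Return the weighted inputs z^l and the activations a^l of every layer."""
    activations, zs = [x], []
    for w, b in zip(weights, biases):
        z = [sum(wjk * ak for wjk, ak in zip(row, activations[-1])) + bj
             for row, bj in zip(w, b)]
        zs.append(z)
        activations.append([sigmoid(zj) for zj in z])
    return zs, activations

def loss(weights, biases, x, y):
    _, activations = forward(weights, biases, x)
    return 0.5 * sum((yj - aj) ** 2 for yj, aj in zip(y, activations[-1]))

def backprop(weights, biases, x, y):
    """Gradients of the loss for one sample, following the four formulas."""
    zs, activations = forward(weights, biases, x)
    # Final error: gradient of the loss w.r.t. the output, times sigma'(z^L).
    delta = [(aj - yj) * sigmoid_prime(zj)
             for aj, yj, zj in zip(activations[-1], y, zs[-1])]
    grads_w = [None] * len(weights)
    grads_b = [None] * len(biases)
    for l in range(len(weights) - 1, -1, -1):
        # dL/db_j = delta_j and dL/dw_jk = a_k (previous layer) * delta_j.
        grads_b[l] = list(delta)
        grads_w[l] = [[ak * dj for ak in activations[l]] for dj in delta]
        if l > 0:
            # Error of the previous layer: (w^T delta) times sigma'(z).
            delta = [sum(weights[l][j][k] * delta[j] for j in range(len(delta)))
                     * sigmoid_prime(zs[l - 1][k])
                     for k in range(len(zs[l - 1]))]
    return grads_w, grads_b

# Hypothetical 2-2-1 network with hand-picked parameters.
weights = [[[0.1, -0.2], [0.4, 0.3]], [[0.5, -0.6]]]
biases = [[0.05, -0.1], [0.2]]
x, y = [1.0, 0.5], [1.0]

grads_w, grads_b = backprop(weights, biases, x, y)

# Finite-difference estimate of dL/dw for one weight, as a sanity check.
eps = 1e-6
weights[0][1][0] += eps
loss_plus = loss(weights, biases, x, y)
weights[0][1][0] -= 2 * eps
loss_minus = loss(weights, biases, x, y)
weights[0][1][0] += eps
numeric = (loss_plus - loss_minus) / (2 * eps)
print(grads_w[0][1][0], numeric)
```

The finite-difference estimate needs two forward passes per parameter, whereas backpropagation gets all the gradients from one forward and one backward pass. This is why training without it would be so slow.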

II. Layers

Here is a brief explanation of the functions that I am considering in the trainNetwork class.

Convolution2DLayer

The convolution layer is the core building block of a convolutional neural network (CNN) and it does most of the computational heavy lifting. CNNs derive their name from the "convolution" operator. The primary purpose of convolution is to extract features from the input image while preserving the spatial relationship between pixels, by learning image features from small squares of input data.

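Figure 2. Feature extraction with convolution (image taken from https://goo.gl/h7pXxf)

In the example in Fig. 2, the 3×3 matrix is called a 'filter' or 'kernel', and the matrix formed by sliding the filter over the image and computing the dot product is called the 'Convolved Feature' or 'Activation Map' (or the 'Feature Map'). In practice, a CNN learns the values of these filters on its own during the training process (although we still need to specify parameters such as the number of filters, the filter size and the architecture of the network before the training process). In general, the more filters we have, the more image features get extracted and the better our network becomes at recognizing patterns in unseen images.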

The size of the Feature Map depends on three parameters: the depth (the number of filters we use for the convolution operation), the stride (the number of pixels by which we slide our filter matrix over the input matrix) and the padding (the number of zeros added around the border of the input matrix).
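The dependence on stride and padding can be sketched in a few lines of plain Python. The helper names and the toy 5×5 binary image are assumptions made for this example (the values are not read off Fig. 2), and a real layer would apply one such filter per unit of depth.

```python
def feature_map_size(input_size, filter_size, stride=1, padding=0):
    """Spatial size of the feature map along one dimension."""
    return (input_size - filter_size + 2 * padding) // stride + 1

def convolve2d(image, kernel, stride=1, padding=0):
    """Slide a square kernel over a zero-padded image and take dot products."""
    rows, cols = len(image), len(image[0])
    k = len(kernel)
    padded = [[0] * (cols + 2 * padding) for _ in range(rows + 2 * padding)]
    for r in range(rows):
        for c in range(cols):
            padded[r + padding][c + padding] = image[r][c]
    out_rows = feature_map_size(rows, k, stride, padding)
    out_cols = feature_map_size(cols, k, stride, padding)
    return [[sum(padded[i * stride + u][j * stride + v] * kernel[u][v]
                 for u in range(k) for v in range(k))
             for j in range(out_cols)]
            for i in range(out_rows)]

# Toy 5x5 binary image and 3x3 filter (illustrative values).
image = [[1, 1, 1, 0, 0],
         [0, 1, 1, 1, 0],
         [0, 0, 1, 1, 1],
         [0, 0, 1, 1, 0],
         [0, 1, 1, 0, 0]]
kernel = [[1, 0, 1],
          [0, 1, 0],
          [1, 0, 1]]

feature_map = convolve2d(image, kernel)
print(feature_map)                              # 3x3 map: stride 1, no padding
print(feature_map_size(5, 3, stride=1, padding=1))  # padding 1 keeps size 5
```

With stride 1 and no padding, the 5×5 input shrinks to a 3×3 feature map; adding one pixel of zero padding keeps the original 5×5 size.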

ReLULayer

ReLU stands for Rectified Linear Unit and is a non-linear operation: $ f(x)=\max(0,x) $. Usually it is applied element-wise to the output of some other function, such as a matrix-vector product. It replaces all negative pixel values in the feature map by zero, with the purpose of introducing non-linearity in our network, since most of the real-world data we want the network to learn is non-linear.

FullyConnectedLayer

Neurons in a fully connected layer have full connections to all activations in the previous layer, as seen in regular neural networks. Hence their activations can be computed with a matrix multiplication followed by a bias offset. In our case, the purpose of the fully connected layer is to use the features extracted by the previous layers for classifying the input image into various classes based on the training dataset. Apart from classification, adding a fully connected layer is also a cheap way of learning non-linear combinations of the features.
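As a minimal sketch of "a matrix multiplication followed by a bias offset", with ReLU applied element-wise to the result, consider the following plain Python example. The layer sizes and all numeric values are hypothetical.

```python
def relu(values):
    """Element-wise max(0, x)."""
    return [max(0.0, v) for v in values]

def fully_connected(weights, bias, activations):
    """Matrix-vector product followed by a bias offset."""
    return [sum(w * a for w, a in zip(row, activations)) + b
            for row, b in zip(weights, bias)]

# Hypothetical layer mapping 3 input activations to 2 outputs.
weights = [[1.0, -2.0, 0.5],
           [0.0, 1.0, -1.0]]
bias = [0.5, -0.5]
features = [1.0, 2.0, 1.0]

z = fully_connected(weights, bias, features)
print(z)         # weighted inputs, one of them negative
print(relu(z))   # the negative value is replaced by zero
```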

Pooling

It is common to periodically insert a pooling layer in-between successive convolution layers. Spatial Pooling (also called subsampling or downsampling) reduces the dimensionality of each feature map but retains the most important information. In particular, pooling

- makes the input representations (feature dimension) smaller and more manageable

- reduces the number of parameters and computations in the network, thereby helping to control overfitting

- makes the network invariant to small transformations, distortions and translations in the input image

- helps us arrive at an almost scale invariant representation of our image

Spatial Pooling can be of different types: Max, Average, Sum etc.

MaxPooling2DLayer

In case of Max Pooling, we define a spatial neighborhood (for example, a 2×2 window) and take the largest element from the rectified feature map within that window.

AveragePooling2DLayer

Instead of taking the largest element, we could also take the average of all the elements in the window.
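Both pooling variants can be sketched with one small function in plain Python. The function name `pool2d`, its parameters and the 4×4 feature map are assumptions for this example only.

```python
def pool2d(feature_map, window=2, stride=2, mode="max"):
    """Max or average pooling over square windows of a 2-D feature map."""
    reducer = max if mode == "max" else (lambda vals: sum(vals) / len(vals))
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for i in range(0, rows - window + 1, stride):
        row = []
        for j in range(0, cols - window + 1, stride):
            # Collect the values inside the current window.
            patch = [feature_map[i + u][j + v]
                     for u in range(window) for v in range(window)]
            row.append(reducer(patch))
        pooled.append(row)
    return pooled

# Illustrative rectified feature map.
rectified = [[1, 1, 2, 4],
             [5, 6, 7, 8],
             [3, 2, 1, 0],
             [1, 2, 3, 4]]

max_pooled = pool2d(rectified, mode="max")
avg_pooled = pool2d(rectified, mode="average")
print(max_pooled)
print(avg_pooled)
```

With a 2×2 window and stride 2, the 4×4 map is reduced to 2×2 in both cases; only the value kept per window differs.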

DropoutLayer

Dropout in deep learning works as follows: during training, one or more nodes of the neural network are randomly switched off once in a while, so that they do not interact with the rest of the network. With dropout, the learned weights of a node become less sensitive to the weights of the other nodes, and each node learns to decide more on its own. In general, dropout helps the network to generalize better, and can thereby increase accuracy on unseen data, since the influence of any single node is decreased.
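A minimal sketch of the idea in plain Python follows. Note that it uses the common "inverted dropout" variant, which rescales the surviving activations during training; this is my assumption for the example and not necessarily what the package or its backend does internally.

```python
import random

def dropout(activations, rate, training=True, rng=random):
    """Zero each activation with probability `rate` during training.

    Surviving activations are scaled by 1 / (1 - rate) ("inverted" dropout),
    so the expected value is unchanged and prediction needs no rescaling.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(42)           # fixed seed, only to make the demo repeatable
hidden = [1.0] * 10000            # hypothetical activations of a hidden layer
dropped = dropout(hidden, rate=0.5, rng=rng)

fraction_zero = dropped.count(0.0) / len(dropped)
mean_activation = sum(dropped) / len(dropped)
print(fraction_zero, mean_activation)
```

About half of the nodes are switched off in each call, while the average activation stays close to its original value. At prediction time (`training=False`) the activations pass through unchanged.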

SoftmaxLayer

The purpose of the softmax classification layer is simply to transform all the net activations in the final output layer into a series of values that can be interpreted as probabilities. To do this, the softmax function is applied to the net inputs:

$ \phi_{softmax} (z)_i = \dfrac{e^{z_i}}{\sum_{j=1}^{k} e^{z_j}} $

where $ z_i $ is the net input of the i-th output unit and k is the number of output units (classes).
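In code, the softmax function takes only a few lines. The sketch below is plain Python with made-up net inputs; subtracting the maximum before exponentiating is a standard numerical trick that leaves the result unchanged.

```python
import math

def softmax(z):
    """Turn a list of net inputs into probabilities that sum to 1."""
    shift = max(z)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(v - shift) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])   # illustrative net inputs for 3 classes
print(probs, sum(probs))
```

The largest net input gets the largest probability, and the outputs always sum to 1, which is what lets us read them as class probabilities.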

CrossChannelNormalizationLayer

The Local Response Normalization (LRN) layer implements lateral inhibition, which in neurobiology refers to the capacity of an excited neuron to subdue its neighbors. This layer is useful when we are dealing with ReLU neurons: they have unbounded activations, and we need LRN to normalize them. We want to detect high-frequency features with a large response. If we normalize around the local neighborhood of the excited neuron, it becomes even more sensitive compared to its neighbors. At the same time, LRN dampens the responses that are uniformly large in any given local neighborhood: if all the values are large, then normalizing them diminishes all of them. So, basically, we want to encourage some kind of inhibition and boost the neurons with relatively larger activations.
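To illustrate the effect, here is a sketch of one common formulation of cross-channel normalization, in which each activation is divided by $ (bias + \alpha \sum a^2)^\beta $ over a window of neighboring channels. The exact formula and the parameter values used by a given library may differ, so the function, its parameter names and its defaults should be read as assumptions for this example.

```python
def local_response_norm(channels, depth_radius=2, bias=1.0, alpha=1.0, beta=0.5):
    """Normalize each channel value by the squared sum of its neighbors.

    `channels` holds the activations of all channels at one pixel position.
    """
    n = len(channels)
    out = []
    for i, a in enumerate(channels):
        lo, hi = max(0, i - depth_radius), min(n, i + depth_radius + 1)
        sqr_sum = sum(v * v for v in channels[lo:hi])
        out.append(a / (bias + alpha * sqr_sum) ** beta)
    return out

# One strongly excited channel versus uniformly large responses.
peak = local_response_norm([0.0, 0.0, 4.0, 0.0, 0.0])
uniform = local_response_norm([4.0, 4.0, 4.0, 4.0, 4.0])
print(peak[2], uniform[2])
```

The same activation of 4.0 is kept almost intact when it stands out from its neighbors, but it is damped much more strongly when all the neighbors are equally large.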

III. Training options

The training function takes as input a trainingOptions object that contains the parameters for the training. A brief explanation:

SolverName

Optimizer chosen to minimize the loss function. To guarantee Matlab compatibility, only Stochastic Gradient Descent with Momentum ('sgdm') is allowed.

Momentum

Parameter for the sgdm: it corresponds to the contribution of the previous step to the current iteration
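To show what this parameter does, here is a minimal sketch of one common way to write the SGD-with-momentum update: a "velocity" keeps a fraction γ (the Momentum value) of the previous update and adds the current gradient scaled by the learning rate η. The function name and the toy loss are hypothetical; the actual optimizer is provided by the backend.

```python
def sgdm_step(params, grads, velocity, learn_rate, momentum):
    """One SGD-with-momentum update; returns new parameters and velocity."""
    # Keep a fraction `momentum` of the previous update, add the new gradient.
    new_velocity = [momentum * v + learn_rate * g
                    for v, g in zip(velocity, grads)]
    new_params = [p - v for p, v in zip(params, new_velocity)]
    return new_params, new_velocity

# Minimize the toy loss L(w) = 0.5 * w^2, whose gradient is simply w.
w, v = [5.0], [0.0]
for _ in range(200):
    w, v = sgdm_step(w, grads=w, velocity=v, learn_rate=0.1, momentum=0.9)
print(w)
```

With Momentum set to 0 this reduces to plain gradient descent; larger values give more weight to the direction of the previous steps.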

InitialLearnRate

Initial learning rate η for the optimizer

LearnRateScheduleSettings

These are the settings that regulate the learning rate. It is a struct containing three values: RateSchedule, RateDropFactor and RateDropPeriod.

RateSchedule: if it is set to 'piecewise', the learning rate drops by a factor of RateDropFactor every RateDropPeriod epochs.
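A piecewise schedule of this kind can be sketched as follows. I am assuming here that "dropping by a factor" means multiplying the current rate by RateDropFactor, and that epochs are counted from 0; the function name and the numbers are made up for the example.

```python
def piecewise_learn_rate(initial_rate, epoch, drop_factor, drop_period):
    """Learning rate at a 0-based epoch under a 'piecewise' schedule.

    Assumes the rate is multiplied by drop_factor every drop_period epochs.
    """
    return initial_rate * drop_factor ** (epoch // drop_period)

rates = [piecewise_learn_rate(0.01, epoch, drop_factor=0.1, drop_period=10)
         for epoch in (0, 9, 10, 25)]
print(rates)
```

The rate stays constant within each period of 10 epochs and is scaled down by the factor 0.1 at epochs 10, 20, and so on.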

L2Regularization

Regularizers make it possible to apply penalties on layer parameters or layer activity during optimization. This is the factor of the L2 regularization.

MaxEpochs

Number of epochs for training

Verbose

Display the information of the training every VerboseFrequency iterations

Shuffle

Random shuffle of the data before training if set to 'once'

CheckpointPath

Path for saving the checkpoints

ExecutionEnvironment

Choice of the hardware for the training: 'cpu', 'gpu', 'multi-gpu' or 'parallel'. The load is divided between the GPU or CPU workers according to the relative division set by WorkerLoad.

OutputFcn

Custom output functions to call during training after each iteration passing a struct containing:

Current epoch number, Current iteration number, TimeSinceStart, TrainingLoss, BaseLearnRate, TrainingAccuracy (or TrainingRMSE for regression), State

Peace,

Enrico

[1] Ian Goodfellow, Yoshua Bengio, Aaron Courville, Deep Learning, MIT Press, 2016, http://www.deeplearningbook.org

[2] http://ufldl.stanford.edu/tutorial/supervised/ConvolutionalNeuralNetwork/

[3] http://web.stanford.edu/class/cs20si/syllabus.html

[4] http://neuralnetworksanddeeplearning.com/

[5] https://bitbucket.org/cittiberto/octave-nnet/src
