Summary of work done during GSoC

GSoC 2017 has come to an end, and I want to thank my mentors and the Octave community for giving me the opportunity to take part in this unique experience.

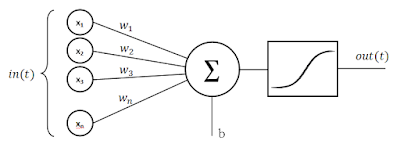

During this Google Summer of Code, my goal was to implement a Convolutional Neural Networks package for GNU Octave from scratch. It will be integrated with the existing nnet package.

This was a very interesting project and a stimulating experience, both for the code I implemented and for the theory behind the algorithms involved. One part was implemented in Octave and another in Python, using the Tensorflow API.


Code repository

All the code implemented during these months can be found in my public repository: https://bitbucket.org/cittiberto/gsoc-octave-nnet/commits/all

(my username: citti berto, bookmark enrico)

Since I implemented a completely new part of the package, I pushed the entire project in three commits, and I am waiting for the community's approval before preparing a PR for the official package [1].

Summary

The first commit (ade115a [2]) contains the layers. There is a class for each layer, with a corresponding function that calls the constructor. All the layers inherit from a Layer class, which lets the user concatenate layers to define the network architecture. Layers have several parameters, which I kept compatible with Matlab [3].

The second commit (479ecc5 [4]) covers the Python part, including an init file that checks the Tensorflow installation. I implemented a Python module, TFinterface, which includes:

- layer.py: an abstract base class that the layer classes inherit from

- layers/layers.py: the layer classes that are used to add the right layer to the TF graph

- dataset.py: a class for managing the input datasets

- trainNetwork.py: the core class, which initiates the graph and the session, and performs the training and the predictions

- deepdream.py: an adaptation of [5] for the deepdream implementation (still to be completed)
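To illustrate how an abstract layer class and concrete layer classes might fit together, here is a minimal Python sketch. It is not the actual code of the module: the names Layer, add_to_graph, ReluLayer, FullyConnectedLayer and build_graph are hypothetical, and a plain Python list stands in for the Tensorflow graph so that the example runs without Tensorflow installed.

```python
from abc import ABC, abstractmethod


class Layer(ABC):
    """Hypothetical base class: every layer knows how to extend a graph."""

    def __init__(self, name):
        self.name = name

    @abstractmethod
    def add_to_graph(self, graph):
        """Append this layer's operation to `graph`."""


class ReluLayer(Layer):
    def add_to_graph(self, graph):
        graph.append(("relu", self.name))


class FullyConnectedLayer(Layer):
    def __init__(self, name, output_size):
        super().__init__(name)
        self.output_size = output_size

    def add_to_graph(self, graph):
        graph.append(("fully_connected", self.name, self.output_size))


def build_graph(layers):
    """Visit the layers in order; a list is a stand-in for a real TF graph."""
    graph = []
    for layer in layers:
        layer.add_to_graph(graph)
    return graph


graph = build_graph([FullyConnectedLayer("fc1", 10), ReluLayer("relu1")])
print(graph)
```

The point of the abstract base class in this sketch is that the code building the graph never needs to know which kind of layer it is handling; it only relies on the shared method.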

The third commit (e7201d8 [6]) includes:

- trainingOptions: all the options for the training. So far, the only optimizer available is stochastic gradient descent with momentum (sgdm), implemented in the class TrainingOptionsSGDM.

- trainNetwork: given the data, the architecture and the options, this function performs the training and returns a SeriesNetwork object

- SeriesNetwork: class that contains the trained network, including the Tensorflow graph and session. It has three methods:

  - predict: predicting scores for regression problems

  - classify: predicting labels for classification problems

  - activations: getting the output of a specific layer of the architecture
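As a reminder of what stochastic gradient descent with momentum does, here is a small Python sketch of the textbook update rule on a one-dimensional toy problem. It is only an illustration: the package delegates the optimization to Tensorflow, whose exact formulation may differ, and the function names, the momentum value of 0.9 and the toy loss are assumptions made for this example.

```python
def sgdm_step(weight, velocity, gradient, learn_rate=0.04, momentum=0.9):
    """One textbook momentum update: keep a running velocity, then move."""
    velocity = momentum * velocity - learn_rate * gradient
    return weight + velocity, velocity


def minimize(steps=300, start=0.0):
    """Minimize the toy loss (w - 3)^2, whose gradient is 2 * (w - 3)."""
    weight, velocity = start, 0.0
    for _ in range(steps):
        gradient = 2.0 * (weight - 3.0)
        weight, velocity = sgdm_step(weight, velocity, gradient)
    return weight


final_weight = minimize()
print(final_weight)
```

The velocity term accumulates past gradients, so the weight keeps moving in a consistent direction even when individual gradients are noisy.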

Goals not met

I did not manage to implement some features because of the time taken by bug fixing in the last period. A considerable amount of time went into testing the algorithms, since the random number generators differ between Matlab, Octave and Python/Tensorflow. I will work in the coming weeks to implement the missing features, and I plan to keep contributing to the maintenance of this package so that it stays up to date with both new Tensorflow versions and new Matlab features.

| Function | Missing features |
|---|---|
| activations | OutputAs (for changing output format) |
| imageInputLayer | DataAugmentation and Normalization |
| trainNetwork | Accepted inputs: imds or tbl |
| trainNetwork | .mat Checkpoints |
| trainNetwork | ExecutionEnvironment: 'multi-gpu' and 'parallel' |
| ClassificationOutputLayer | classnames |
| TrainingOptions | WorkerLoad and OutputFcn |
| DeepDreamImages | Generalization to any network and AlexNet example |

Tutorial for testing the package

- Install the Python Tensorflow API (as explained in [7])

- Install Pytave (following the instructions in [8])

- Install the nnet package (in Octave: install [9] and load [10])

- Check the package with make check PYTAVE="pytave/dir/"

- Open Octave, add the Pytave directory to the path and run your first network:

```octave
### TRAINING ###
# Load the training set
[XTrain,TTrain] = digitTrain4DArrayData();

# Define the layers
layers = [imageInputLayer([28 28 1]);
          convolution2dLayer(5,20);
          reluLayer();
          maxPooling2dLayer(2,'Stride',2);
          fullyConnectedLayer(10);
          softmaxLayer();
          classificationLayer()];

# Define the training options
options = trainingOptions('sgdm', 'MaxEpochs', 15, 'InitialLearnRate', 0.04);

# Train the network
net = trainNetwork(XTrain,TTrain,layers,options);

### TESTING ###
# Load the testing set
[XTest,TTest] = digitTest4DArrayData();

# Predict the new labels
YTestPred = classify(net,XTest);
```
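To see what this architecture does to the data, the Python sketch below traces the feature-map size through the layers of the example. It assumes that convolution2dLayer(5,20) uses a stride of 1 and no padding, which is an assumption about the defaults rather than something stated in this post; the helper name output_size is hypothetical.

```python
def output_size(size, window, stride=1, padding=0):
    """Spatial size after a convolution or pooling window slides over the input."""
    return (size + 2 * padding - window) // stride + 1


# imageInputLayer([28 28 1])
side, channels = 28, 1
shapes = [(side, side, channels)]

# convolution2dLayer(5,20): 5x5 filters, 20 output channels
# (assumed stride 1, no padding)
side, channels = output_size(side, 5), 20
shapes.append((side, side, channels))

# maxPooling2dLayer(2,'Stride',2): 2x2 window, stride 2
side = output_size(side, 2, stride=2)
shapes.append((side, side, channels))

# Number of inputs seen by fullyConnectedLayer(10)
flattened = side * side * channels

for shape in shapes:
    print(shape)
print(flattened)
```

Under these assumptions the fully connected layer receives 12 x 12 x 20 = 2880 inputs and maps them to the 10 digit classes.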

Future improvements

- Manage the session saving

- Save the checkpoints as .mat files and not as TF checkpoints

- Optimize array passing via Pytave

- Categorical variables for classification problems

Links

Repo link: https://bitbucket.org/cittiberto/gsoc-octave-nnet/commits/all

[1] https://sourceforge.net/p/octave/nnet/ci/default/tree/

[2] https://bitbucket.org/cittiberto/gsoc-octave-nnet/commits/ade115a0ce0c80eb2f617622d32bfe3b2a729b13

[3] https://it.mathworks.com/help/nnet/classeslist.html?s_cid=doc_ftr

[4] https://bitbucket.org/cittiberto/gsoc-octave-nnet/commits/479ecc5c1b81dd44c626cc5276ebff5e9f509e84

[5] https://github.com/tensorflow/tensorflow/tree/master/tensorflow/examples/tutorials/deepdream

[6] https://bitbucket.org/cittiberto/gsoc-octave-nnet/commits/e7201d8081ca3c39f335067ca2a117e7971b5087

[7] https://www.tensorflow.org/install/

[8] https://bitbucket.org/mtmiller/pytave

[9] https://www.gnu.org/software/octave/doc/interpreter/Installing-and-Removing-Packages.html

[10] https://www.gnu.org/software/octave/doc/v4.0.1/Using-Packages.html

I just followed the installation instructions and got the following error when running the first line:

```
>> [XTrain,TTrain] = digitTrain4DArrayData();
error: load: unable to find file private/Digits_TRAIN.mat
error: called from
    digitTrain4DArrayData at line 31 column 7
```

I checked, and there is no Digits_TRAIN.mat file in the repository.


Sir, are you still working on this project? I would really like to contribute to it.
